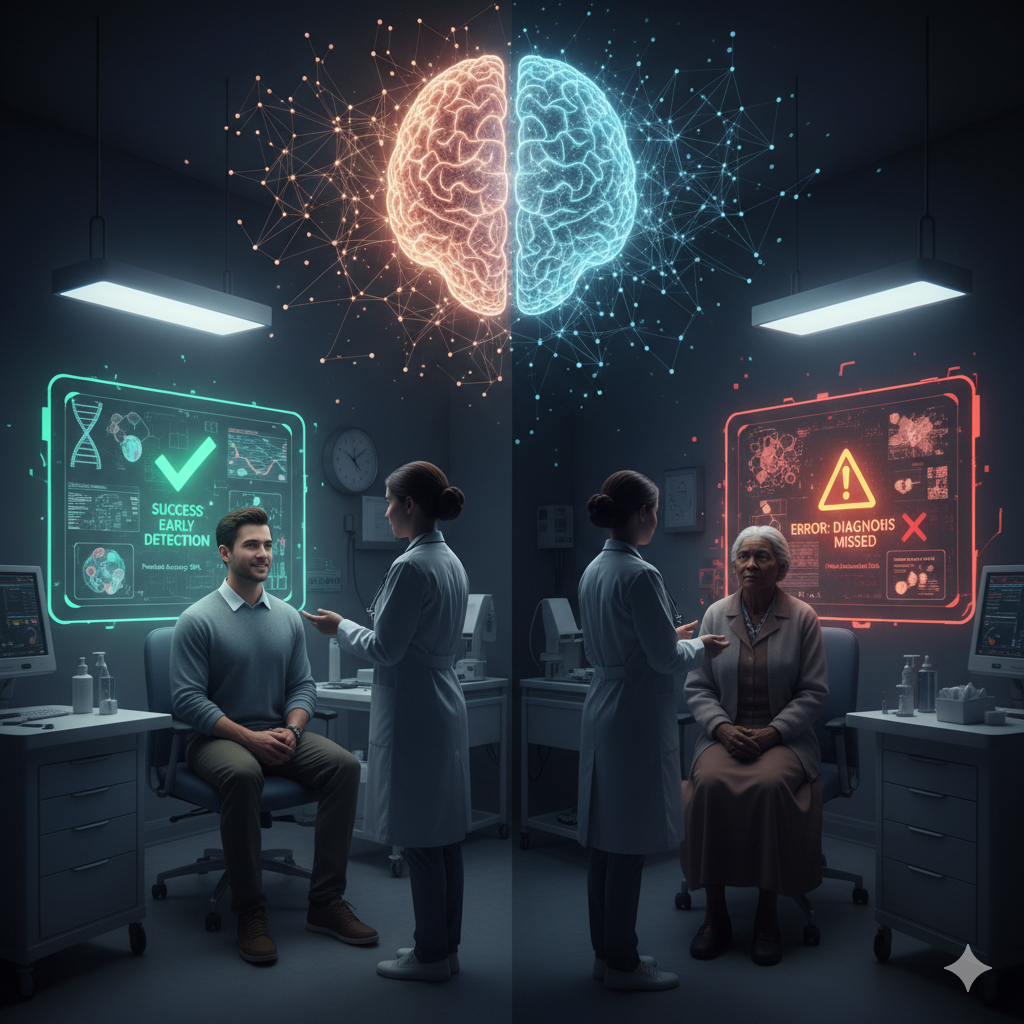

Artificial Intelligence (AI) is ushering in a medical revolution, offering incredible potential for rapid diagnostics, personalized treatment protocols, and optimized patient care. Yet, as AI systems are increasingly deployed in hospitals and clinics, a critical ethical challenge has emerged: algorithmic bias. This systemic issue causes AI to disproportionately disadvantage certain patient groups, often unintentionally amplifying the very social and health inequalities the technology was meant to solve.

The presence of bias is not a technical glitch; it is a reflection of the entire AI lifecycle. It begins with data bias, where training datasets lack diversity in race, gender, socioeconomic status, or even geographic origin. Imagine an AI designed to detect skin lesions. If its training data predominantly features images of lighter skin tones, its diagnostic accuracy for individuals with darker skin is likely to drop sharply, leading to dangerous missed diagnoses.

The second source is algorithmic bias, embedded in design choices. For instance, an algorithm developed to predict a patient’s need for complex health management might be trained using historical healthcare expenditure as a proxy for illness severity. This design can incorrectly flag wealthier, healthier patients, who use more expensive services, as needing more resources, while overlooking sicker, underserved patients whose low utilization produces low, misleading cost figures. Together, these biases exacerbate health disparities and erode the fundamental principles of fairness and patient trust.
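The cost-as-proxy problem can be made concrete with a toy ranking. The sketch below is illustrative only: the `Patient` fields, the four patients, and their figures are all hypothetical, and counting chronic conditions is just one assumed stand-in for true clinical need. It shows how ranking by historical spending and ranking by need can select entirely different people for the same care program.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    name: str
    chronic_conditions: int  # hypothetical stand-in for true illness burden
    annual_cost_eur: float   # historical spending, used as the proxy label


def top_k(patients, key, k):
    """Return the names of the k patients ranked highest by `key`."""
    ranked = sorted(patients, key=key, reverse=True)
    return [p.name for p in ranked[:k]]


# Invented example data: A and B are well served and use many services;
# C and D are sicker but underserved, so their recorded costs are low.
patients = [
    Patient("A", chronic_conditions=1, annual_cost_eur=9000.0),
    Patient("B", chronic_conditions=2, annual_cost_eur=7000.0),
    Patient("C", chronic_conditions=5, annual_cost_eur=2500.0),
    Patient("D", chronic_conditions=4, annual_cost_eur=3000.0),
]

# Two program slots, filled according to two different definitions of "need".
by_cost = top_k(patients, key=lambda p: p.annual_cost_eur, k=2)
by_need = top_k(patients, key=lambda p: p.chronic_conditions, k=2)

print("Selected by cost proxy:", by_cost)
print("Selected by illness burden:", by_need)
```

In this invented data the two selections do not overlap at all. Real cohorts will be less extreme, but the mechanism is the same: the choice of label decides who the model treats as high need.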

Mitigating AI bias demands more than just patching code; it requires a holistic, ethical commitment from business and society.

Healthcare professionals, developers, and administrators are the gatekeepers of ethical deployment. The development process itself must be interdisciplinary, including clinicians, data scientists, ethicists, and patient advocates from the very beginning. Once built, an AI model cannot be a “set it and forget it” tool. Continuous monitoring and validation are paramount. A model must be rigorously tested on a local patient population before widespread deployment to ensure its performance generalizes. Critically, a human-in-the-loop approach must be mandated: the AI should serve as a support tool, never replacing the final clinical judgment of a healthcare provider, who retains the authority to override a potentially biased algorithmic recommendation.
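One simple form this validation can take is a per-subgroup audit on locally collected cases. The following is a minimal sketch, not a validated protocol: the function names, the group labels, the sample records, and the 0.10 gap threshold are all assumptions made for illustration. It computes sensitivity (the share of true cases the model catches) for each patient group and flags groups that fall well behind the best-performing one.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute sensitivity per group.

    `records` is an iterable of (group, y_true, y_pred) tuples,
    where 1 means the condition is present / predicted present.
    Groups with no positive cases are omitted from the result.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


def flag_gaps(per_group, max_gap=0.10):
    """Return groups whose sensitivity trails the best group by more than max_gap.

    The 0.10 default is an arbitrary illustrative threshold, not a clinical standard.
    """
    best = max(per_group.values())
    return sorted(g for g, s in per_group.items() if best - s > max_gap)


# Invented local validation records: (group, true label, model prediction).
records = [
    ("lighter_skin", 1, 1), ("lighter_skin", 1, 1),
    ("lighter_skin", 1, 1), ("lighter_skin", 1, 0),
    ("lighter_skin", 0, 0),
    ("darker_skin", 1, 1), ("darker_skin", 1, 0),
    ("darker_skin", 1, 0), ("darker_skin", 1, 0),
    ("darker_skin", 0, 0),
]

per_group = sensitivity_by_group(records)
flagged = flag_gaps(per_group)
print(per_group)
print("Groups needing review:", flagged)
```

Rerunning an audit like this on fresh local data at regular intervals is one way to turn "continuous monitoring" into a routine check, with flagged groups escalated to clinicians for review rather than handled automatically.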

In the context of the Italian National Health Service (Servizio Sanitario Nazionale – SSN), AI bias can manifest in ways unique to its infrastructure and demographics, primarily revolving around the regional divide.

Consider an AI system designed for resource allocation or predictive risk scoring. Italy has a well-known disparity between remote and urban areas. If a predictive model is trained primarily on high-quality, dense data from a leading urban research hospital, it may become highly accurate for that specific context. When deployed in a rural clinic with a different case mix and fewer resources, the same model may perform poorly, leading to inappropriate care recommendations and amplifying existing territorial disparities.

Similarly, Large Language Models (LLMs) are increasingly used for monitoring chronic patients at home. If the training data for these models does not adequately represent the communication styles, common geriatric conditions, and complex health profiles of elderly Italians, they may fail to detect critical changes in patient status. Moreover, the digital divide among older citizens introduces a deployment bias: the segment of the population that most needs continuous care is the one most likely to be excluded from the benefits of the new technology.

Ultimately, addressing AI bias is a matter of health justice. It demands an ethical commitment to continuous scrutiny and multi-stakeholder collaboration to ensure that technological advancement in medicine is a force for equity, not division.